Securing Edge-to-Edge Chain of Custody in Augmented and Predictive Analytics

Many digital products today incorporate elements of an Internet of Things (IoT) management framework. However, their contributions to advancing technology within the diverse realm of “IoT data management” are often limited. Cloud-based IoT data aggregation and visualization platforms are already well established. They operate as platform-as-a-service solutions that receive data streams and then store, aggregate, contextualize, compute, and visualize the data. These platforms are not groundbreaking, which leaves ample room for improvement by innovative newcomers to the market.

This article highlights the distinctions between low-speed Industry 4.0 interfacing and high-speed data operations that require elevated security measures, specific data transmission protocols, and data mining environments. It also covers how to ensure that the chain of custody of all your high-risk and compliance-oriented assets is confidentially secured with zero trust. One example is clinical predictive analytics, which demands either a secure transformation of existing tools or, from the very beginning, the creation of new data tools tailored for streaming, computation, visualization, and data aggregation.

A typical IoT-to-cloud environment encompasses the following components:

- Data Capture/Ingestion: This stage uses stream ingestion engines such as Firehose, Kinesis, Vertex, and Apache NiFi. Data streaming protocols such as MQTT and HTTP are chosen based on bandwidth requirements, while a high-speed data interface (HSDI) is used for more rapid data operations. Custom data streams can be established using tools like Apache MiNiFi and MATLAB. Organizations are using embedded security technologies like ARM TrustZone to secure the data chain of custody. In the clinical sector, a new data transmission protocol connecting ARM TrustZone with secure enclave enforcement, such as SafeLiShare Confidential Clean Rooms on the zero trust cloud edge, facilitates secure and federated clinical data sharing.

- Security: While endpoint management software handles much of the security on the device side, the concept of enclave security is gaining prominence. Edge-to-edge enclave security is instrumental in ensuring secure data transmission from the device to the cloud. Solutions like SafeLiShare use enclave security to protect clinical data libraries, allowing safe sharing for modeling while maintaining a secure chain of custody. Enclave security also facilitates targeted data packet routing and stands as the most viable method for secure clinical data sharing.

- Elastic Data Storage: For low-speed data contextualization, SQL suffices. However, data-intensive operations like clinical predictive analytics favor NoSQL database environments.

- Aggregation or Contextualization: Data aggregation and contextualization engines transform raw data into actionable insights.

- Containerization: Container technology, orchestrated by platforms like Kubernetes, allows an entire stream processing application to be packaged and deployed as a cohesive unit.

- Computing Layer: Following data storage, a computing layer typically encompasses normalization, stream processing, and algorithmic processing for use cases ranging from clinical predictive analytics to manufacturing.

- Content Management: Certain applications, such as clinical predictive analytics, require comprehensive record retention, including waveforms and specific reports. Within the IoT sphere, workflows need to be duplicated and presented in a user interface.

- Data Visualization: Data visualization is achieved through tools ranging from simple dashboards to more advanced visualizations. Emerging technologies bridge the gap between gaming environments and sophisticated data visualization, delving into augmented reality and three-dimensional spaces.
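The chain-of-custody idea in the ingestion and security items can be illustrated with a small tamper-evident log. The sketch below is not SafeLiShare's protocol or an ARM TrustZone API. It is a minimal, assumed design in which each sensor reading is authenticated with HMAC-SHA256 and linked to the previous record, so later alteration or reordering becomes detectable. The names `DEVICE_KEY`, `append_record`, `verify_chain`, and the `monitor-01` device are hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret. A real device would provision its key inside
# a trusted execution environment instead of hard-coding it.
DEVICE_KEY = b"demo-key-not-for-production"
GENESIS = "0" * 64  # placeholder "previous MAC" for the first record


def _digest(body: dict) -> str:
    """HMAC-SHA256 over a canonical JSON encoding of the record body."""
    payload = json.dumps(body, sort_keys=True, separators=(",", ":")).encode()
    return hmac.new(DEVICE_KEY, payload, hashlib.sha256).hexdigest()


def append_record(chain: list, device_id: str, reading: dict) -> dict:
    """Append a reading, binding it to the previous record's MAC."""
    prev = chain[-1]["mac"] if chain else GENESIS
    body = {"seq": len(chain), "device_id": device_id,
            "reading": reading, "prev": prev}
    record = {**body, "mac": _digest(body)}
    chain.append(record)
    return record


def verify_chain(chain: list) -> bool:
    """Return True only if every record is intact and correctly linked."""
    prev = GENESIS
    for seq, record in enumerate(chain):
        body = {k: v for k, v in record.items() if k != "mac"}
        if body.get("seq") != seq or body.get("prev") != prev:
            return False
        if not hmac.compare_digest(record["mac"], _digest(body)):
            return False
        prev = record["mac"]
    return True


custody_log = []
append_record(custody_log, "monitor-01", {"heart_rate": 72})
append_record(custody_log, "monitor-01", {"heart_rate": 75})
print(verify_chain(custody_log))
```

In this sketch the signing and verifying sides share one process purely for illustration. In a deployment along the lines the article describes, the key would stay inside the device's trusted environment and verification would run inside the receiving enclave.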

In summary, while numerous digital entities play a role in IoT management, there is still significant space for innovation. A wide range of applications, spanning from low-speed interfacing to high-speed tasks such as clinical predictive analytics, underscores the demand for tailored solutions. The facets of security, data transmission protocols, storage, aggregation, computation, and visualization collectively define the IoT data management landscape, presenting opportunities for continuous growth and development.
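To make the aggregation and computing stages more concrete, here is a minimal sketch of fixed-window (tumbling-window) aggregation over raw sensor readings. It does not represent any particular platform's engine. The function name `aggregate_windows`, the tuple layout `(timestamp, device_id, value)`, and the `pump-a` and `pump-b` devices are assumptions made for illustration.

```python
from collections import defaultdict
from statistics import mean


def aggregate_windows(readings, window_seconds=60):
    """Group (timestamp, device_id, value) tuples into fixed time windows
    and summarise each window per device."""
    buckets = defaultdict(list)
    for timestamp, device_id, value in readings:
        # Align each reading to the start of the window it falls in.
        window_start = int(timestamp // window_seconds) * window_seconds
        buckets[(device_id, window_start)].append(value)
    return {
        key: {"count": len(values), "mean": mean(values), "max": max(values)}
        for key, values in sorted(buckets.items())
    }


# Hypothetical raw readings: seconds since start, device name, measurement.
raw = [
    (0, "pump-a", 10.0),
    (30, "pump-a", 14.0),
    (61, "pump-a", 20.0),
    (15, "pump-b", 5.0),
]

summary = aggregate_windows(raw)
for key, stats in summary.items():
    print(key, stats)
```

A low-speed dashboard might run a summary like this once a minute, while a high-speed clinical workload would need a streaming engine that does the same grouping continuously and at much finer windows.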

In Industry 4.0, real-time sensor connections can inadvertently carry sensitive PII or HIPAA-regulated information that must be safeguarded with the utmost care. Achieving comprehensive security, including encryption at rest, in transit, and, crucially, during execution, is paramount. Cloud-edge augmented analytics or predictive analytics, where models and data are processed within secure enclaves using nano instances, offers a robust solution. SafeLiShare’s approach allows organizations to choose between “bringing compute to data” and “bringing data to compute,” depending on their specific regulatory compliance needs.

We deliberately left out the key software solutions used in each stage of the cloud edge ML or edge AI lifecycle. If you’re interested in delving deeper into this topic, we’d love to share our insights and reference designs with you. Let’s connect and discuss further through the SafeLiShare demo link. #Industry40 #DataSecurity #SafeLiShare #IoT #Analytics

Experience Secure Collaborative Data Sharing Today.

Learn more about how SafeLiShare works

Suggested for you

February 21, 2024

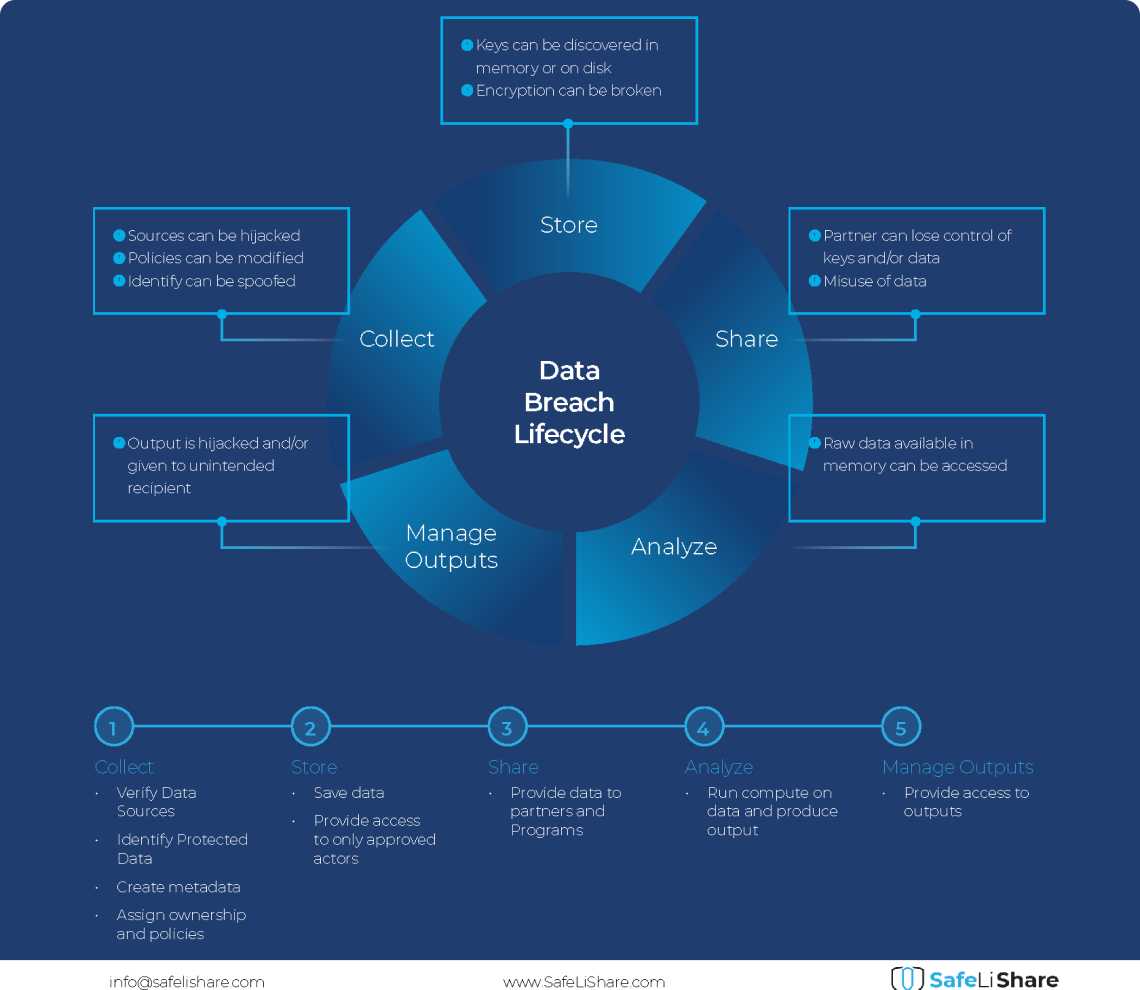

Cloud Data Breach Lifecycle Explained

During the data life cycle, sensitive information may be exposed to vulnerabilities in transfer, storage, and processing activities.

February 21, 2024

Bring Compute to Data

Predicting cloud data egress costs can be a daunting task, often leading to unexpected expenses post-collaboration and inference.

February 21, 2024

Zero Trust and LLM: Better Together

Cloud analytics inference and Zero Trust security principles are synergistic components that significantly enhance data-driven decision-making and cybersecurity resilience.